Transient Imaging

How does the world look at a billion frames per second? We try to answer this question, and propose new ways of capturing the world, by developing techniques for imaging, reconstruction, and synthesis, where we break the assumption of infinite speed of light.

Transient imaging is a recently emerged field that breaks the traditional imaging assumption of an infinite speed of light. By leveraging the wealth of information in light transport at extreme temporal resolutions, novel techniques have been proposed that show movies of light in motion, see around corners or through highly scattering media, or capture material properties from a distance, to name a few. Our goal in this field is to develop new techniques for the effective capture and simulation of time-resolved light transport, and to propose new scene reconstruction techniques that take advantage of the information unveiled in the temporal domain.

Publications

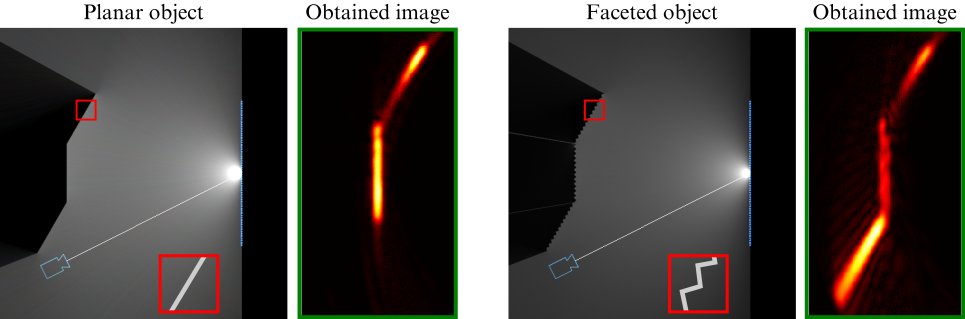

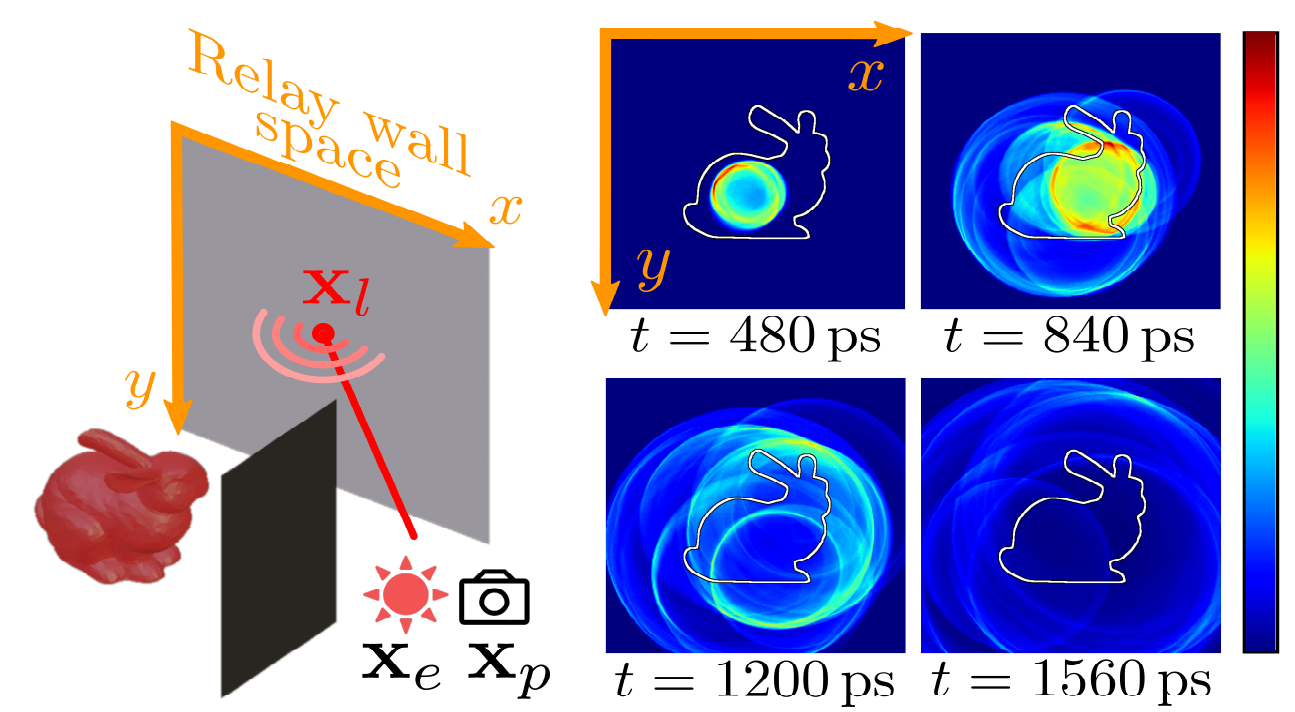

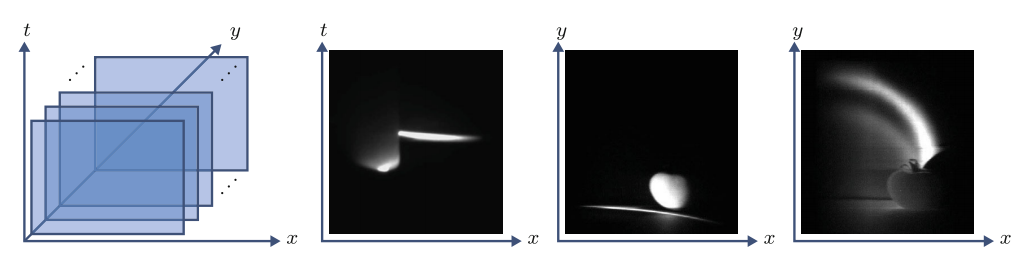

Looking Around Flatland: End-to-End 2D Real-Time NLOS Imaging

María Peña, Diego Gutierrez, Julio Marco

IEEE Transactions on Computational Imaging

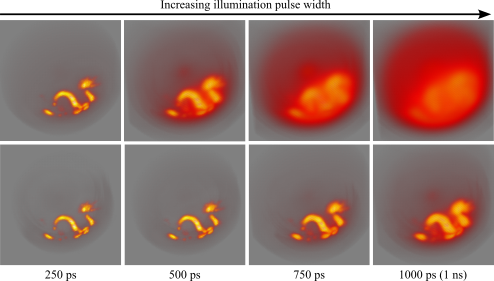

Abstract: Time-gated non-line-of-sight (NLOS) imaging methods reconstruct scenes hidden around a corner by inverting the optical path of indirect photons measured at visible surfaces. These methods are, however, hindered by intricate, time-consuming calibration processes involving expensive capture hardware. Simulation of transient light transport in synthetic 3D scenes has become a powerful but computationally-intensive alternative for analysis and benchmarking of NLOS imaging methods. NLOS imaging methods also suffer from high computational complexity. In our work, we rely on dimensionality reduction to provide a real-time simulation framework for NLOS imaging performance analysis. We extend steady-state light transport in self-contained 2D worlds to take into account the propagation of time-resolved illumination by reformulating the transient path integral in 2D. We couple it with the recent phasor-field formulation of NLOS imaging to provide an end-to-end simulation and imaging pipeline that incorporates different NLOS imaging camera models. Our pipeline yields real-time NLOS images and progressive refinement of light transport simulations. We allow comprehensive control on a wide set of scene, rendering, and NLOS imaging parameters, providing effective real-time analysis of their impact on reconstruction quality. We illustrate the effectiveness of our pipeline by validating 2D counterparts of existing 3D NLOS imaging experiments, and provide an extensive analysis of imaging performance including a wider set of NLOS imaging conditions, such as filtering, reflectance, and geometric features in NLOS imaging setups.

Zero-Phase Phasor Fields for Non-Line-of-Sight Imaging

Pablo Luesia-Lahoz, Talha Sultan, Forrest B. Peterson, Andreas Velten, Diego Gutierrez, Adolfo Muñoz

International Conference on Computational Photography 2025 (ICCP)

Abstract: Non-line-of-sight imaging employs ultra-fast illumination and sensing devices to reconstruct scenes outside their line of sight by analyzing the temporal profile of indirect scattered illumination on a secondary relay surface. Commonly, the NLOS methods transform the temporal domain into the frequency domain and operate on it, and then identify surface locations by locating the maxima in amplitude along the reconstruction volume. Phase information, which is virtual as it results from a Fourier transform, is very often discarded or ignored. We incorporate phase information into our novel Zero-Phase Phasor Fields imaging technique, which we derive for a confocal capture configuration. We show how, at positions that belong to the hidden geometry, we can ensure the phase is zero, so we can locate the hidden geometry with great precision by locating the zero crossings in the phase. This allows us to reconstruct at widely spaced locations and still achieve up to 125 micrometer depth precision, as our experimental validation shows with both synthetic and captured data, the latter publicly available. Moreover, the phase is robust to noise, as we demonstrate with decreasing signal-to-noise ratio using publicly available dataset captures of the same scene.

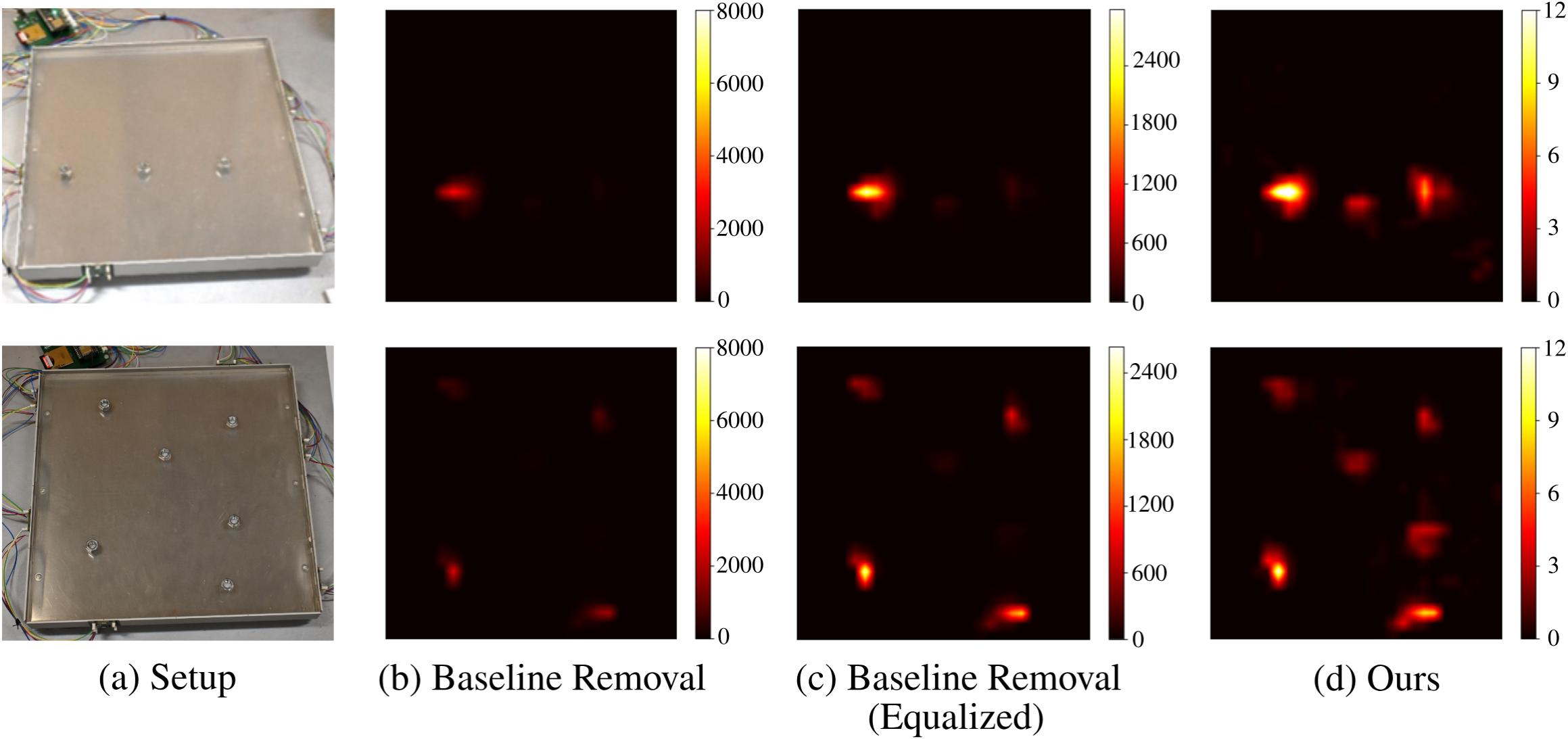

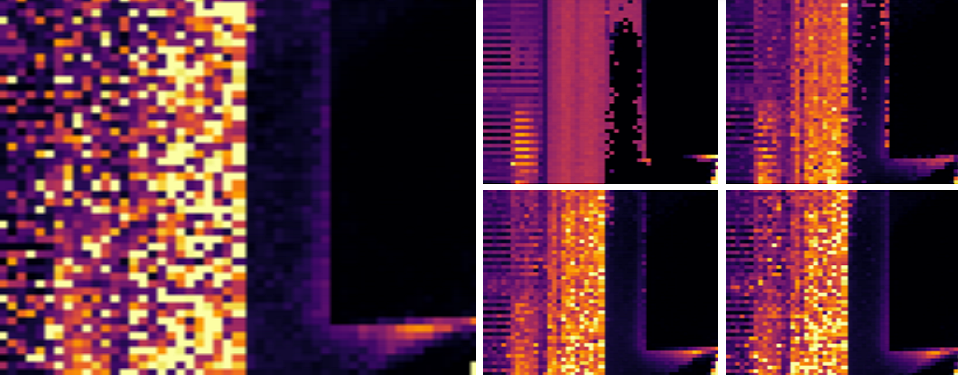

Time-of-flight signal processing for FTIR-based tactile sensors

Jorge García-Pueyo, Sergio Cartiel, Emmanuel Bacher, Martin Laurenzis, Adolfo Muñoz

Optics Express, Vol. 33, Issue 18

Abstract: Optical tactile sensors offer a promising avenue for advanced sensing and perception. We focus on frustrated total internal reflection (FTIR) tactile sensors that utilize time-of-flight (ToF) measurements. We analyze the complex behavior of ToF signals within optical waveguides in the time domain, where phenomena like internal reflections and scattering significantly influence light propagation, especially in the presence of touch. Leveraging this analysis, we develop a real-time processing algorithm that enhances FTIR tactile sensing capabilities, allowing for precise detection. We evaluate our algorithm on an OptoSkin sensor setup, demonstrating a significant improvement in multi-touch detection and contact shape reconstruction accuracy. This work represents a significant step towards high-resolution, low-cost optical tactile sensors, and advances the understanding of time-resolved light transport within waveguides and in scattering media.

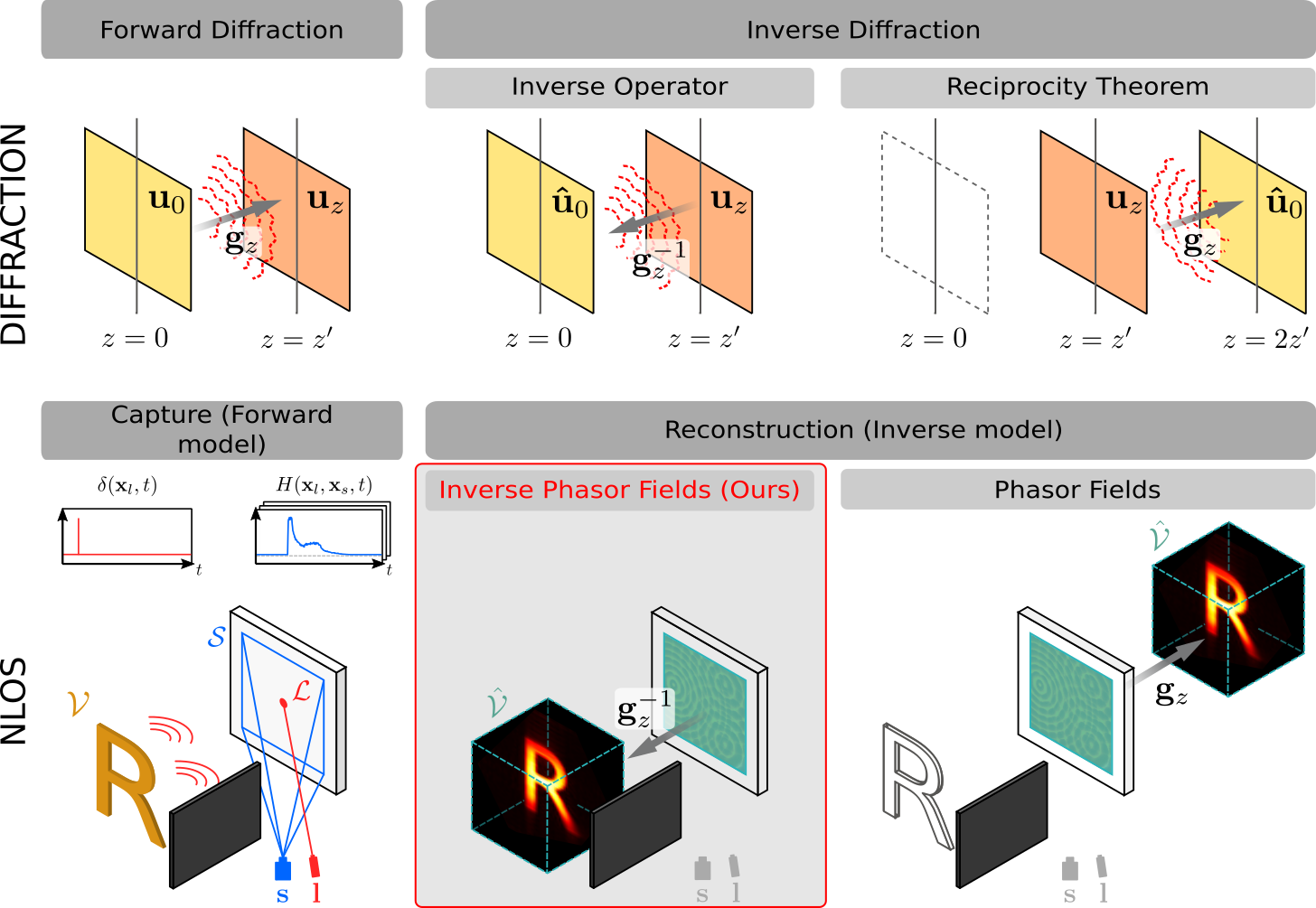

Forward and inverse diffraction in phasor fields

Jorge García Pueyo, Adolfo Muñoz

Optics Express, Vol. 33(5), 2025

Abstract: Non-line-of-sight (NLOS) imaging is an inverse problem that consists of reconstructing a hidden scene out of the direct line-of-sight given the time-resolved light scattered back by the hidden scene on a relay wall. Phasor Fields transforms NLOS imaging into virtual LOS imaging by treating the relay wall as a secondary camera, which allows reconstruction of the hidden scene using a forward diffraction operator based on the Rayleigh-Sommerfeld diffraction (RSD) integral. In this work, we leverage the unitary property of the forward diffraction operator and the dual space it introduces, concepts already studied in inverse diffraction, to explain how Phasor Fields can be understood as an inverse diffraction method for solving the hidden object reconstruction, even though initially it might appear to use a forward diffraction operator. We present two analogies for NLOS imaging, as alternatives to the classical virtual camera metaphor in Phasor Fields, treating the relay wall either as a phase conjugator or as a hologram recorder. Based on this, we express NLOS imaging as an inverse diffraction problem, which is ill-posed under general conditions, in a formulation named Inverse Phasor Fields, that we solve numerically. This enables us to analyze which conditions make the NLOS problem formulated as inverse diffraction well-posed, and propose a new quality metric based on the matrix rank of the forward diffraction operator, which we relate to the Rayleigh criterion for lateral resolution of an imaging system already used in Phasor Fields.

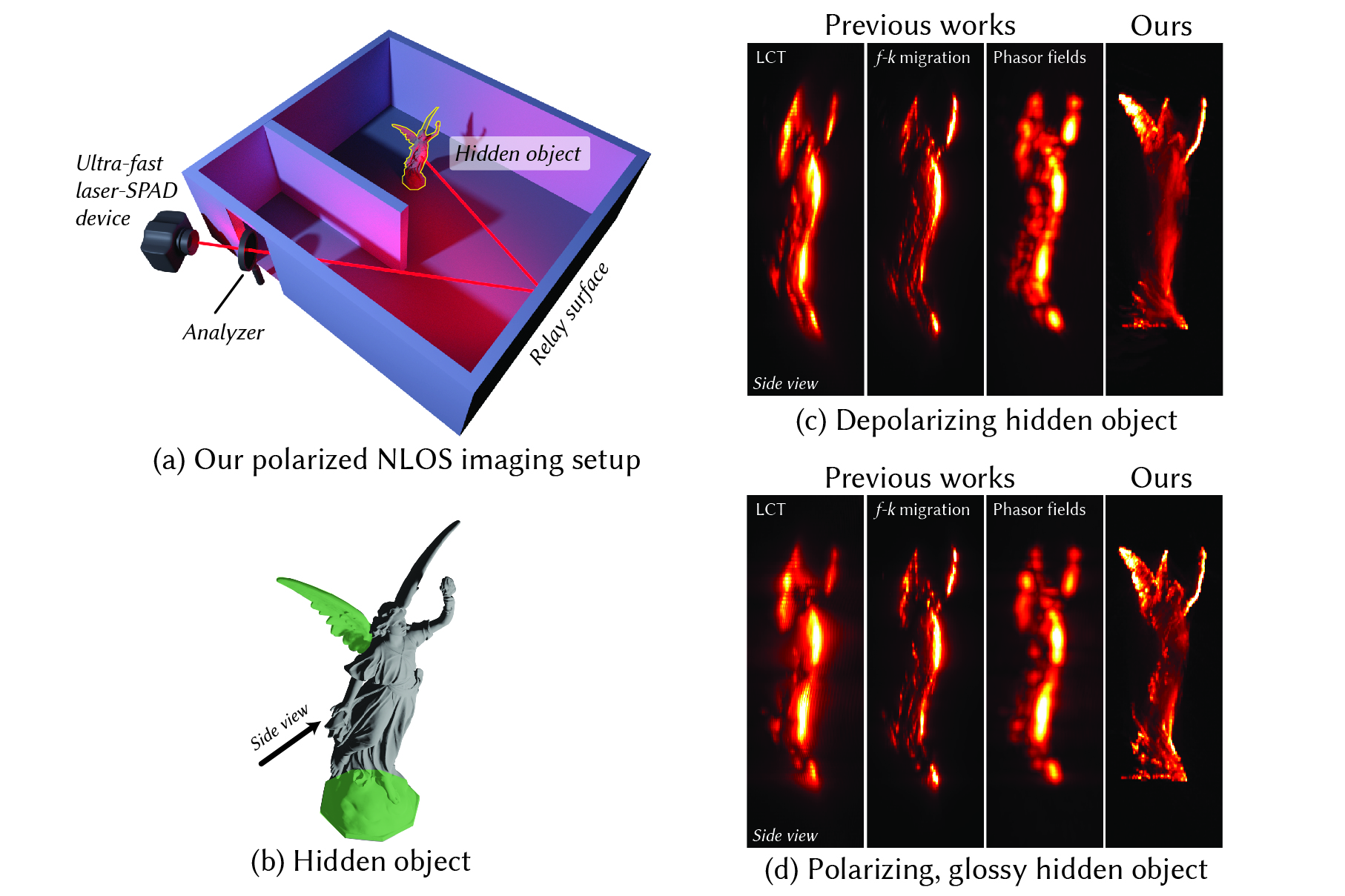

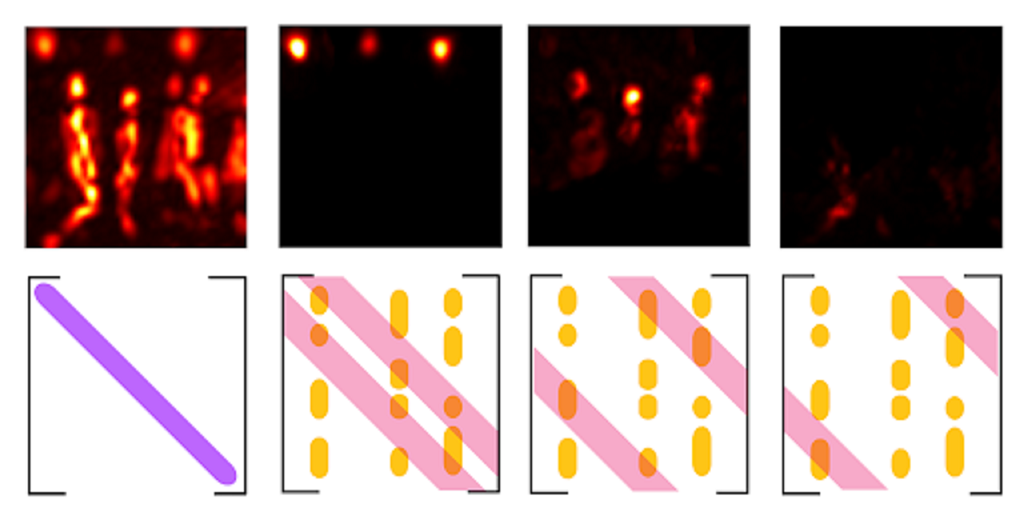

Time-Gated Polarization for Active Non-Line-Of-Sight Imaging

Oscar Pueyo-Ciutad, Julio Marco, Stephane Schertzer, Frank Christnacher, Martin Laurenzis, Diego Gutierrez, Albert Redo-Sanchez

SIGGRAPH Asia 2024

Abstract: We propose a novel method to reconstruct non-line-of-sight (NLOS) scenes that combines polarization and time-of-flight light transport measurements. Unpolarized NLOS imaging methods reconstruct objects hidden around corners by inverting time-gated indirect light paths measured at a visible relay surface, but fail to reconstruct scene features depending on their position and orientation with respect to such surface. We address this limitation (known as the missing cone problem) by capturing the polarization state of light in time-gated imaging systems at picosecond time resolution, and introducing a novel inversion method that leverages directionality information of polarized measurements to reduce directional ambiguities in the reconstruction. Our method is capable of imaging features of hidden surfaces inside the missing cone space of state-of-the-art NLOS methods, yielding fine reconstruction details even when using a fraction of measured points on the relay surface. We demonstrate the benefits of our method in both simulated and experimental scenarios.

Cohesive framework for non-line-of-sight imaging based on Dirac notation

Albert Redo-Sanchez, Pablo Luesia-Lahoz, Diego Gutierrez, Adolfo Muñoz

Optics Express, Vol. 32(6), 2024

Abstract: The non-line-of-sight (NLOS) imaging field encompasses both experimental and computational frameworks that focus on imaging elements that are out of the direct line-of-sight, for example, imaging elements that are around a corner. Current NLOS imaging methods offer a compromise between accuracy and reconstruction time as experimental setups have become more reliable, faster, and more accurate. However, all these imaging methods implement different assumptions and light transport models that are only valid under particular circumstances. This paper lays down the foundation for a cohesive theoretical framework which provides insights about the limitations and virtues of existing approaches in a rigorous mathematical manner. In particular, we adopt Dirac notation and concepts borrowed from quantum mechanics to define a set of simple equations that enable: i) the derivation of other NLOS imaging methods from such single equation (we provide examples of the three most used frameworks in NLOS imaging: back-propagation, phasor fields, and f-k migration); ii) the demonstration that the Rayleigh-Sommerfeld diffraction operator is the propagation operator for wave-based imaging methods; and iii) the demonstration that back-propagation and wave-based imaging formulations are equivalent since, as we show, propagation operators are unitary. We expect that our proposed framework will deepen our understanding of the NLOS field and expand its utility in practical cases by providing a cohesive intuition on how to image complex NLOS scenes independently of the underlying reconstruction method.

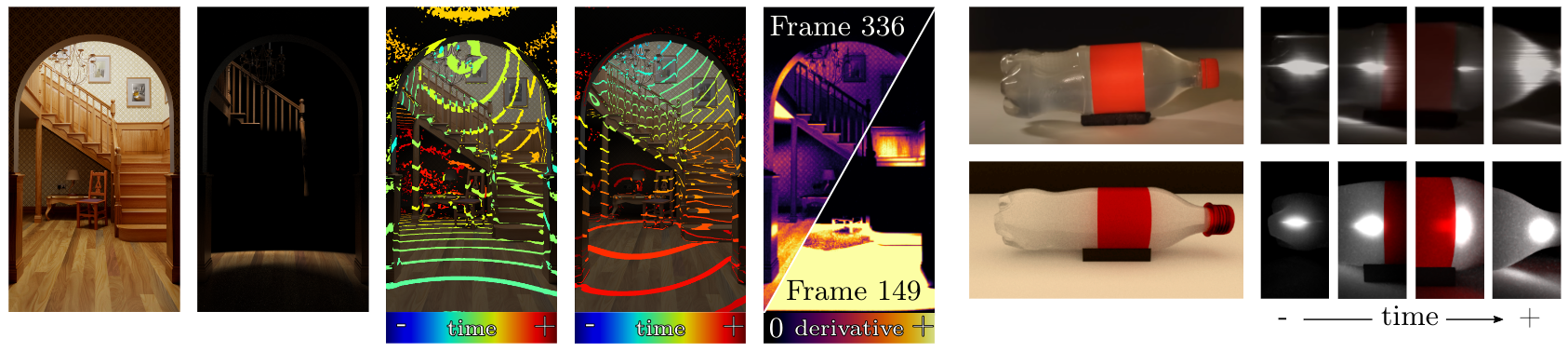

mitransient: Transient light transport in Mitsuba 3

Diego Royo, Miguel Crespo, Jorge García-Pueyo

International Conference on Computational Photography (ICCP) Poster, 2024

Abstract: mitransient is a rendering tool that simulates different sorts of time-resolved sensing devices (including time-gated and transient cameras), with easy-to-extend modules written in Python, leveraging the state-of-the-art technology of Mitsuba 3. We can simulate the interactions of light with complex materials and participating media, run on both CPU and GPU by using vectorized JIT-compiled code, compute ray tracing queries with optimized acceleration structures, and compute derivatives including the temporal domain. We have published our code on GitHub and as a PyPI package for easy installation: pip install mitransient

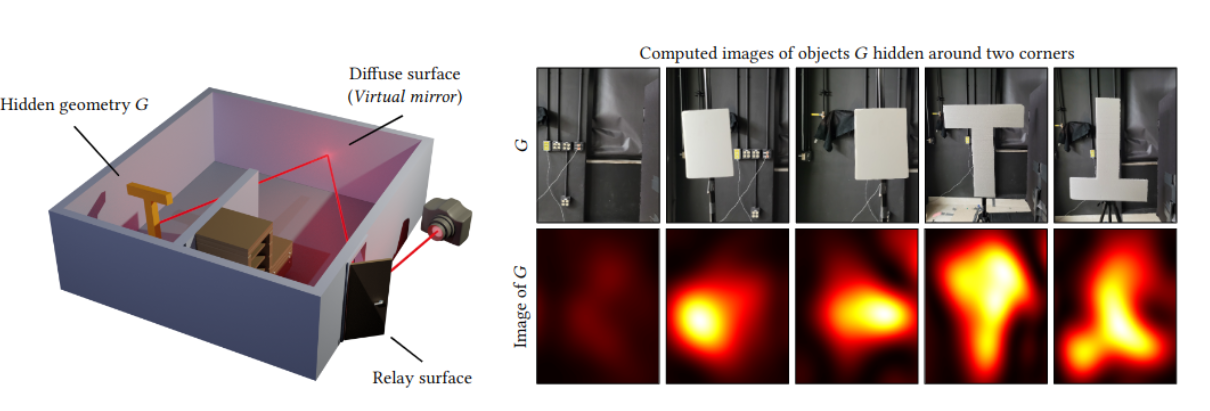

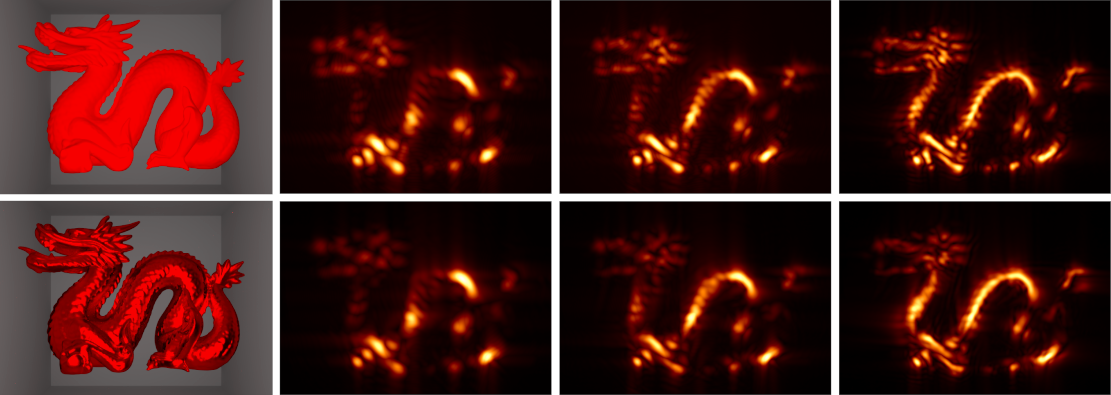

Virtual Mirrors: Non-Line-of-Sight Imaging Beyond the Third Bounce

Diego Royo, Talha Sultan, Adolfo Muñoz, Khadijeh Masumnia-Bisheh, Eric Brandt, Diego Gutierrez, Andreas Velten, Julio Marco

ACM Transactions on Graphics (Proc. SIGGRAPH 2023)

Abstract: Non-line-of-sight (NLOS) imaging methods are capable of reconstructing complex scenes that are not visible to an observer using indirect illumination. However, they assume only third-bounce illumination, so they are currently limited to single-corner configurations, and present limited visibility when imaging surfaces at certain orientations. To reason about and tackle these limitations, we make the key observation that planar diffuse surfaces behave specularly at wavelengths used in the computational wave-based NLOS imaging domain. We call such surfaces virtual mirrors. We leverage this observation to expand the capabilities of NLOS imaging using illumination beyond the third bounce, addressing two problems: imaging single-corner objects at limited visibility angles, and imaging objects hidden behind two corners. To image objects at limited visibility angles, we first analyze the reflections of the known illuminated point on surfaces of the scene as an estimator of the position and orientation of objects with limited visibility. We then image those limited visibility objects by computationally building secondary apertures at other surfaces that observe the target object from a direct visibility perspective. Beyond single-corner NLOS imaging, we exploit the specular behavior of virtual mirrors to image objects hidden behind a second corner by imaging the space behind such virtual mirrors, where the mirror image of objects hidden around two corners is formed. No specular surfaces were involved in the making of this paper.

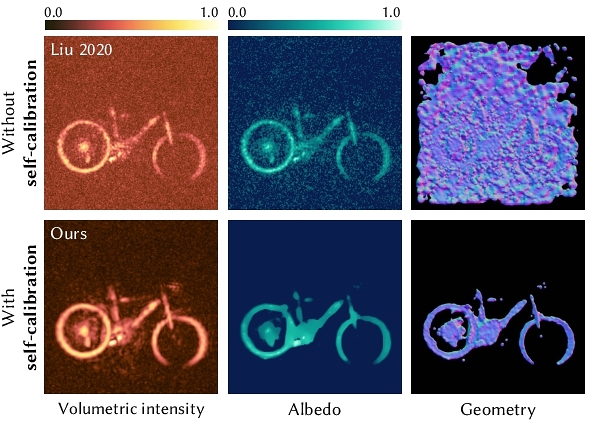

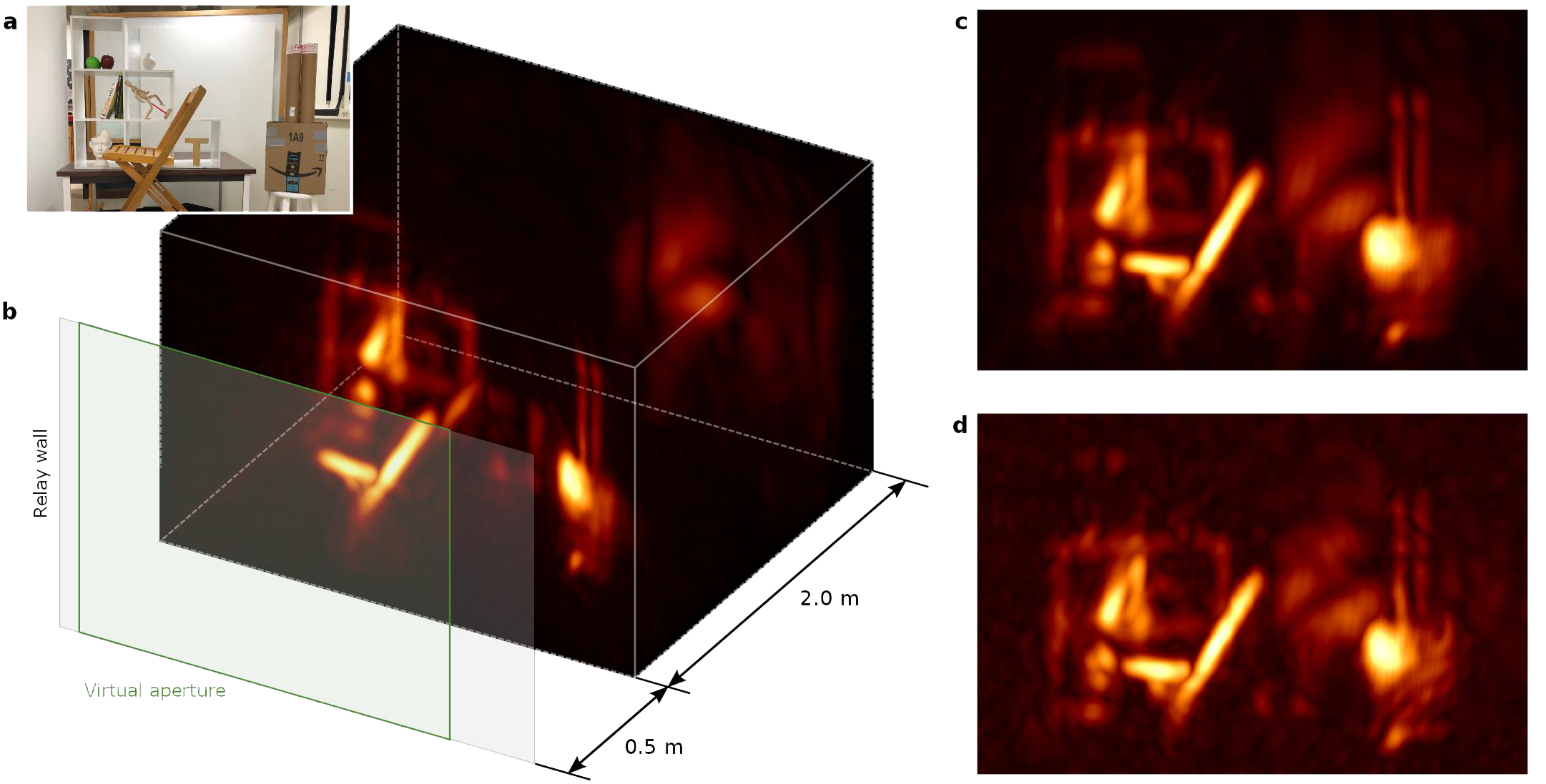

Self-Calibrating, Fully Differentiable NLOS Inverse Rendering

Kiseok Choi, Inchul Kim, Dongyoung Choi, Julio Marco, Diego Gutierrez, Min H. Kim

SIGGRAPH Asia 2023

Abstract: Existing time-resolved non-line-of-sight (NLOS) imaging methods reconstruct hidden scenes by inverting the optical paths of indirect illumination measured at visible relay surfaces. These methods are prone to reconstruction artifacts due to inversion ambiguities and capture noise, which are typically mitigated through the manual selection of filtering functions and parameters. We introduce a fully-differentiable end-to-end NLOS inverse rendering pipeline that self-calibrates the imaging parameters during the reconstruction of hidden scenes, using as input only the measured illumination while working both in the time and frequency domains. Our pipeline extracts a geometric representation of the hidden scene from NLOS volumetric intensities and estimates the time-resolved illumination at the relay wall produced by such geometric information using differentiable transient rendering. We then use gradient descent to optimize imaging parameters by minimizing the error between our simulated time-resolved illumination and the measured illumination. Our end-to-end differentiable pipeline couples diffraction-based volumetric NLOS reconstruction with path-space light transport and a simple ray marching technique to extract detailed, dense sets of surface points and normals of hidden scenes. We demonstrate the robustness of our method to consistently reconstruct geometry and albedo, even under significant noise levels.

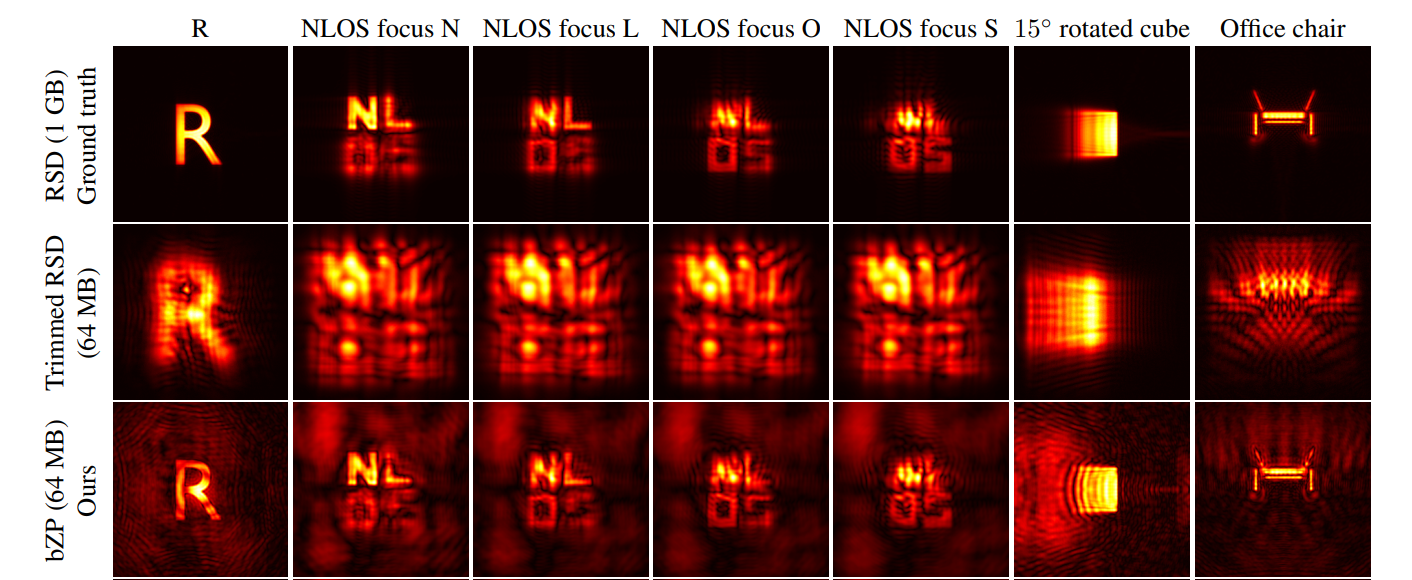

Zone Plate Virtual Lenses for Memory-Constrained NLOS Imaging

Pablo Luesia-Lahoz, Diego Gutierrez, Adolfo Muñoz

IEEE ICASSP 2023

Abstract: The recently introduced Phasor Fields framework for non-line-of-sight imaging allows imaging hidden scenes by treating a relay surface as a virtual camera. It formulates the problem as a diffractive wave propagation, solved by the Rayleigh–Sommerfeld diffraction (RSD) integral. Efficient Phasor Fields implementations employ RSD-based kernels to propagate waves from parallel planes by means of 2D convolutions. However, the kernel storage requirements are prohibitive, hampering the integration of these techniques in memory-constrained devices and applications like car safety. Instead of relying on expensive RSD kernels, we propose the use of alternative virtual lenses to focus on the incoming phasor field and image hidden scenes. In particular, we propose using zone plates (ZP), which require significantly less memory. As our results show, our ZP virtual lenses allow us to obtain reasonable reconstructions of the hidden scene, offering an attractive trade-off for memory-constrained devices.

Structure-Aware Parametric Representations for Time-Resolved Light Transport

Diego Royo*, Zesheng Huang*, Yun Liang, Boyan Song, Adolfo Muñoz, Diego Gutierrez, Julio Marco

Optics Letters 47(19), 2022

Abstract: Time-resolved illumination provides rich spatio-temporal information for applications such as accurate depth sensing or hidden geometry reconstruction, becoming a useful asset for prototyping and as input for data-driven approaches. However, time-resolved illumination measurements are high-dimensional and have a low signal-to-noise ratio, hampering their applicability in real scenarios. We propose a novel method to compactly represent time-resolved illumination using mixtures of exponentially-modified Gaussians that are robust to noise and preserve structural information. Our method yields representations two orders of magnitude smaller than discretized data, providing consistent results in applications such as hidden scene reconstruction and depth estimation, and quantitative improvements over previous approaches.
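
To make the representation in the abstract above more concrete, here is a minimal sketch of evaluating a mixture of exponentially-modified Gaussians (EMGs) as a compact stand-in for a densely sampled temporal profile. It uses the standard closed-form EMG density; the function names, the parameter layout `(weight, mu, sigma, lam)`, and the example values are assumptions made for illustration, and the paper's fitting procedure is not shown.

```python
import math


def emg_pdf(t, mu, sigma, lam):
    """Exponentially-modified Gaussian density: a Gaussian (mu, sigma)
    convolved with an exponential decay of rate lam."""
    exponent = 0.5 * lam * (2.0 * mu + lam * sigma ** 2 - 2.0 * t)
    erfc_arg = (mu + lam * sigma ** 2 - t) / (math.sqrt(2.0) * sigma)
    return 0.5 * lam * math.exp(exponent) * math.erfc(erfc_arg)


def emg_mixture(t, components):
    """Weighted sum of EMG lobes; each component is (weight, mu, sigma, lam)."""
    return sum(w * emg_pdf(t, mu, sigma, lam) for (w, mu, sigma, lam) in components)


# Hypothetical two-lobe profile (arbitrary time units): eight numbers
# describe a signal that would otherwise be stored as thousands of bins.
components = [(0.7, 5.0, 0.3, 2.0), (0.3, 9.0, 0.5, 1.0)]
dt = 0.01
profile = [emg_mixture(i * dt, components) for i in range(4000)]
```

Each lobe has a sharp Gaussian-like rise and an exponential tail, so a few lobes can follow the skewed peaks that are typical of time-resolved measurements.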

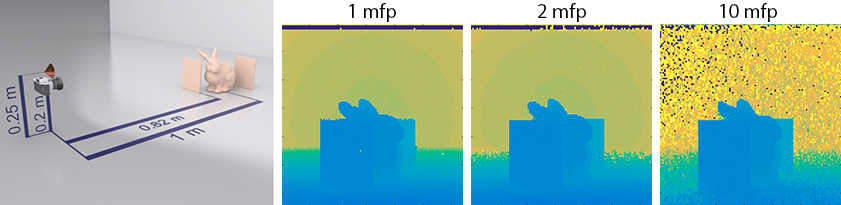

Non-line-of-sight imaging in the presence of scattering media

Pablo Luesia-Lahoz*, Miguel Crespo*, Adrian Jarabo, Albert Redo-Sanchez

Optics Letters 47(15), 2022

Abstract: Non-line-of-sight (NLOS) imaging aims to reconstruct partially or completely occluded scenes. Recent approaches have demonstrated high-quality reconstructions of complex scenes with arbitrary reflectance, occlusions, and significant multi-path effects. However, previous works focused on surface scattering only, which reduces the generality in more challenging scenarios such as scenes submerged in scattering media. In this work, we investigate current state-of-the-art NLOS imaging methods based on phasor fields to reconstruct scenes submerged in scattering media. We empirically analyze the capability of phasor fields in reconstructing complex synthetic scenes submerged in thick scattering media. We also apply the method to real scenes, showing that it performs similarly to recent diffuse optical tomography methods.

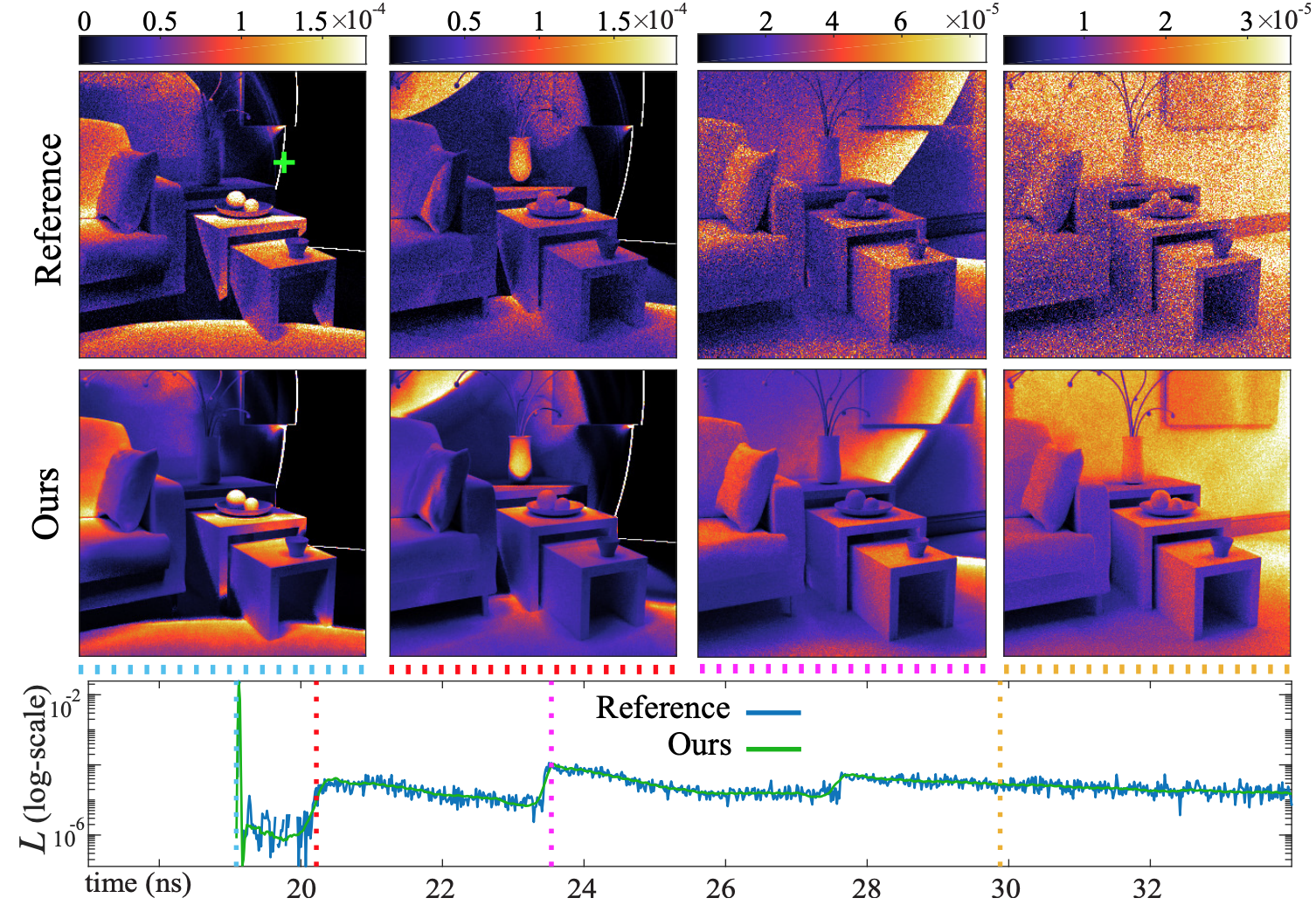

Non-line-of-sight transient rendering

Diego Royo, Jorge Garcia, Adolfo Muñoz, Adrián Jarabo

Computers & Graphics, Vol. 107 (CEIG), 2022

Abstract: The capture and analysis of light in flight, or light in transient state, has enabled applications such as range imaging, reflectance estimation and especially non-line-of-sight (NLOS) imaging. For this last case, hidden geometry can be reconstructed using time-resolved measurements of indirect diffuse light emitted by a laser. Transient rendering is a key tool for developing such new applications, significantly more challenging than its steady-state counterpart. In this work, we introduce a set of simple yet effective subpath sampling techniques targeting transient light transport simulation in occluded scenes. We analyze the usual capture setups of NLOS scenes, where both the camera and light sources are focused on particular points in the scene. Also, the hidden geometry can be difficult to sample using conventional techniques. We leverage that configuration to reduce the integration path space. We implement our techniques in a modified version of Mitsuba 2 adapted for transient light transport, allowing us to support parallelization, polarization, and differentiable rendering.

Virtual light transport matrices for non-line-of-sight imaging

Julio Marco, Adrian Jarabo, Ji Hyun Nam, Xiaochun Liu, Miguel Ángel Cosculluela, Andreas Velten, Diego Gutierrez

Proceedings of the International Conference on Computer Vision (ICCV) 2021 (oral)

Abstract: The light transport matrix (LTM) is an instrumental tool in line-of-sight (LOS) imaging, describing how light interacts with the scene and enabling applications such as relighting or separation of illumination components. We introduce a framework to estimate the LTM of non-line-of-sight (NLOS) scenarios, coupling recent virtual forward light propagation models for NLOS imaging with the LOS light transport equation. We design computational projector-camera setups, and use these virtual imaging systems to estimate the transport matrix of hidden scenes. We introduce the specific illumination functions to compute the different elements of the matrix, overcoming the challenging wide-aperture conditions of NLOS setups. Our NLOS light transport matrix allows us to (re)illuminate specific locations of a hidden scene, and separate direct, first-order indirect, and higher-order indirect illumination of complex cluttered hidden scenes, similar to existing LOS techniques.

Compression and Denoising of Transient Light Transport

Yun Liang, Mingqin Chen, Zesheng Huang, Diego Gutierrez, Adolfo Muñoz, Julio Marco

Optics Letters, Vol. 45(7), 2020

Abstract: Exploiting temporal information of light propagation captured at ultra-fast frame rates has enabled applications such as reconstruction of complex hidden geometry, or vision through scattering media. However, these applications require high-dimensional and high-resolution transport data, which introduces significant performance and storage constraints. Additionally, due to different sources of noise in both captured and synthesized data, the signal becomes significantly degraded over time, compromising the quality of the results. In this work we tackle these issues by proposing a method that extracts meaningful sets of features to accurately represent time-resolved light transport data. Our method reduces the size of time-resolved transport data up to a factor of 32, while significantly mitigating variance in both temporal and spatial dimensions.

On the Effect of Reflectance on Phasor Field Non-Line-of-Sight imaging

Ibón Guillén, Xiaochun Liu, Andreas Velten, Diego Gutierrez, Adrian Jarabo

IEEE ICASSP 2020

Abstract: Non-line-of-sight (NLOS) imaging aims to visualize occluded scenes by exploiting indirect reflections on visible surfaces. Previous methods approach this problem by inverting the light transport on the hidden scene, but are limited to isolated, diffuse objects. The recently introduced phasor fields framework computationally poses NLOS reconstruction as a virtual line-of-sight (LOS) problem, lifting most assumptions about the hidden scene. In this work we complement recent theoretical analysis of phasor field-based reconstruction, by empirically analyzing the effect of reflectance of the hidden scenes on reconstruction. We experimentally study the reconstruction of hidden scenes composed of objects with increasingly specular materials. Then, we evaluate the effect of the virtual aperture size on the reconstruction, and establish connections between the effect of these two different dimensions on the results. We hope our analysis helps to characterize the imaging capabilities of this promising new framework, and foster new NLOS imaging modalities.

Non-Line-of-Sight Imaging using Phasor Field Virtual Wave Optics

Xiaochun Liu, Ibón Guillén, Marco La Manna, Ji Hyun Nam, Syed Azer Reza, Toan Huu Le, Adrian Jarabo, Diego Gutierrez, Andreas Velten

Nature, Vol. 572, Issue 7771, 2019

Abstract: Non-line-of-sight imaging allows objects to be observed when partially or fully occluded from direct view, by analysing indirect diffuse reflections off a secondary relay surface. Despite many potential applications, existing methods lack practical usability because of limitations including the assumption of single scattering only, ideal diffuse reflectance and lack of occlusions within the hidden scene. By contrast, line-of-sight imaging systems do not impose any assumptions about the imaged scene, despite relying on the mathematically simple processes of linear diffractive wave propagation. Here we show that the problem of non-line-of-sight imaging can also be formulated as one of diffractive wave propagation, by introducing a virtual wave field that we term the phasor field. Non-line-of-sight scenes can be imaged from raw time-of-flight data by applying the mathematical operators that model wave propagation in a conventional line-of-sight imaging system. Our method yields a new class of imaging algorithms that mimic the capabilities of line-of-sight cameras. To demonstrate our technique, we derive three imaging algorithms, modelled after three different line-of-sight systems. These algorithms rely on solving a wave diffraction integral, namely the Rayleigh–Sommerfeld diffraction integral. Fast solutions to Rayleigh–Sommerfeld diffraction and its approximations are readily available, benefiting our method. We demonstrate non-line-of-sight imaging of complex scenes with strong multiple scattering and ambient light, arbitrary materials, large depth range and occlusions. Our method handles these challenging cases without explicitly inverting a light-transport model. We believe that our approach will help to unlock the potential of non-line-of-sight imaging and promote the development of relevant applications not restricted to laboratory conditions.
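
The idea of imaging by wave propagation from the relay wall can be illustrated with a heavily simplified sketch. It assumes a 2D world, a single virtual wavelength, and one hidden point scatterer; the wall records a spherical-wave phasor exp(ikr)/r, and each candidate location is scored by summing the wall samples after undoing the propagation phase. The function names and all numeric values are hypothetical, and this is not one of the imaging algorithms derived in the paper.

```python
import cmath
import math


def relay_wall_phasor(source, wall_xs, wavelength):
    """Monochromatic phasor field on the wall (z = 0) from a point source."""
    k = 2.0 * math.pi / wavelength
    sx, sz = source
    field = []
    for x in wall_xs:
        r = math.hypot(x - sx, sz)
        field.append(cmath.exp(1j * k * r) / r)
    return field


def focus(field, wall_xs, point, wavelength):
    """Sum the wall samples after removing the phase accumulated over the
    distance to `point`; the sum is coherent only at the true location."""
    k = 2.0 * math.pi / wavelength
    px, pz = point
    total = 0j
    for p, x in zip(field, wall_xs):
        r = math.hypot(x - px, pz)
        total += p * cmath.exp(-1j * k * r)
    return abs(total)


wavelength = 0.05                                   # metres (assumed)
wall_xs = [-1.0 + 0.01 * i for i in range(201)]     # 2 m virtual aperture
depths = [0.5 + 0.05 * i for i in range(21)]        # candidate depths
hidden = (0.2, depths[10])                          # hidden scatterer
field = relay_wall_phasor(hidden, wall_xs, wavelength)
scores = [focus(field, wall_xs, (0.2, z), wavelength) for z in depths]
best_depth = depths[scores.index(max(scores))]
```

At the true depth every term adds in phase, so the magnitude peaks there; elsewhere the phases disagree across the aperture and partially cancel.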

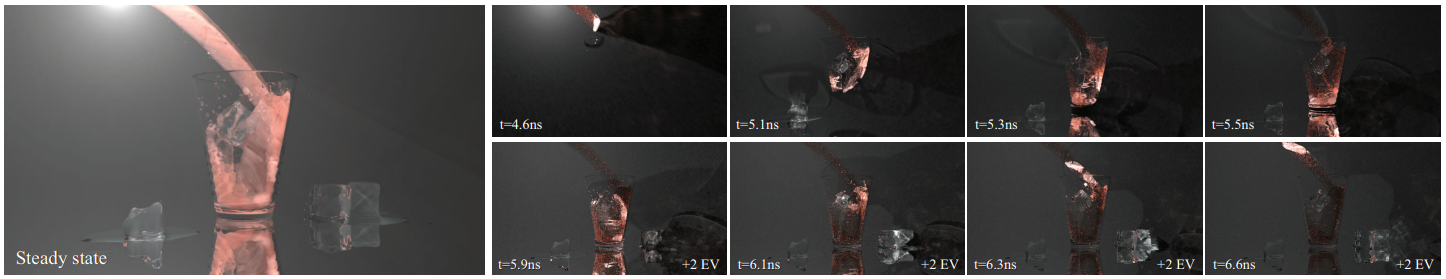

Progressive Transient Photon Beams

Julio Marco, Ibón Guillén, Wojciech Jarosz, Diego Gutierrez, Adrian Jarabo

Computer Graphics Forum, Vol. 38(6), 2019

Abstract: In this work we introduce a novel algorithm for transient rendering in participating media. Our method is consistent, robust, and is able to generate animations of time-resolved light transport featuring complex caustic light paths in media. We base our method on the observation that the spatial continuity provides an increased coverage of the temporal domain, and generalize photon beams to transient state. We extend steady-state photon beam radiance estimates to include the temporal domain. Then, we develop a progressive variant of our approach which provably converges to the correct solution using finite memory by averaging independent realizations of the estimates with progressively reduced kernel bandwidths. We derive the optimal convergence rates accounting for space and time kernels, and demonstrate our method against previous consistent transient rendering methods for participating media.
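
As a rough illustration of "progressively reduced kernel bandwidths", the sketch below uses the bandwidth update commonly seen in progressive density estimation for a one-dimensional kernel, where each pass shrinks the bandwidth by (i + alpha) / (i + 1). The function name, the value of alpha, and the use of a single 1D kernel are assumptions; the rates the paper derives for combined spatial and temporal kernels are not reproduced here.

```python
def progressive_bandwidths(initial, alpha, passes):
    """Kernel bandwidth per pass for a 1D kernel, with 0 < alpha < 1.
    Smaller alpha shrinks the kernel faster (less bias, more variance)."""
    bandwidths = [initial]
    for i in range(1, passes):
        bandwidths.append(bandwidths[-1] * (i + alpha) / (i + 1))
    return bandwidths


def running_average(estimates):
    """Average of independent per-pass estimates, as kept by a progressive
    renderer with finite memory (only the running mean is stored)."""
    mean = 0.0
    for n, value in enumerate(estimates, start=1):
        mean += (value - mean) / n
    return mean


radii = progressive_bandwidths(initial=1.0, alpha=2.0 / 3.0, passes=6)
```

Because only the running mean and the current bandwidth are stored, memory stays constant regardless of the number of passes.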

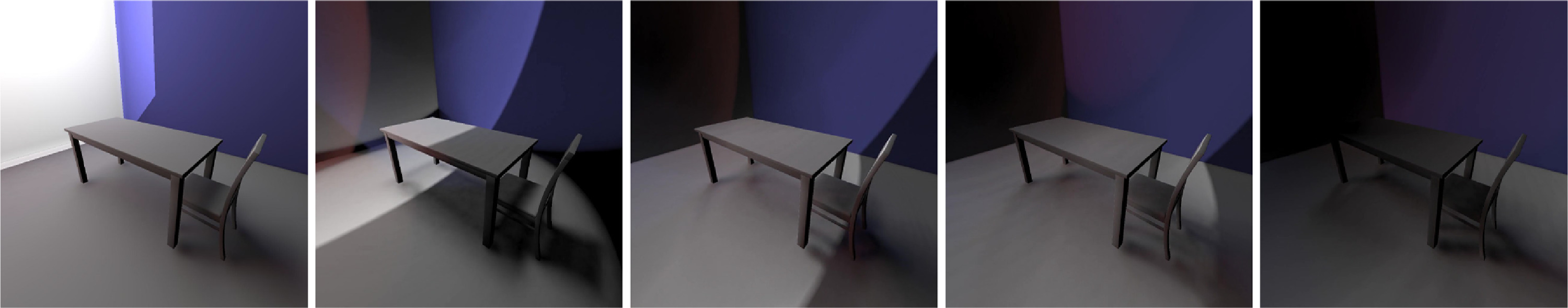

Transient Instant Radiosity for Efficient Time-Resolved Global Illumination

Xian Pan, Victor Arellano, Adrián Jarabo

Computers & Graphics, Vol. 83, 2019

Abstract: Over the last decade, transient imaging has had a major impact in the area of computer graphics and computer vision. The ability to analyze light propagation at picosecond resolution has enabled a variety of applications such as non-line of sight imaging, vision through turbid media, or visualization of light in motion. However, despite the improvements in capture at such temporal resolution, existing rendering methods are still very time-consuming, requiring a large number of samples to converge to noise-free solutions, therefore limiting the applicability of such simulations. In this work, we generalize instant radiosity, which is very suitable for parallelism on the GPU, to transient state. First, we derive it from the transient path integral, including propagation and scattering delays. Then, we propose an efficient implementation on the GPU, and demonstrate interactive transient rendering with hundreds of thousands of samples per pixel to produce noiseless time-resolved renders.

A Dataset for Benchmarking Time-Resolved Non-Line-Of-Sight Imaging

Miguel Galindo, Julio Marco, Matthew O'Toole, Gordon Wetzstein, Diego Gutierrez, Adrian Jarabo

ICCP 2019 Posters

Abstract: Transient imaging has made it possible to look around corners by exploiting information from time-resolved indirect illumination captured at a visible surface. While most previous works have only demonstrated this technique in limited and controlled scenarios, recent works have proven that this technology can be applied to image very complex occluded scenes. We present a public dataset of synthetic time-resolved Non-Line-of-Sight (NLOS) scenes to validate new research, benchmark reconstruction methods, and serve as training data for imaging methods based on machine learning. Our dataset is an order of magnitude larger than any other available data. These scenes include an increasing level of complexity, from simple isolated objects with varying complexity in geometry and reflectance, to scenes with significant multibounce, and to scenes simulating real-world indoor and outdoor scenarios. With this dataset, we hope to boost research on NLOS imaging and to help bring it closer to real-world applications.

Adaptive polarization-difference transient imaging for depth estimation in scattering media

Rihui Wu*, Adrian Jarabo*, Jinli Suo, Feng Dai, Yongdong Zhang, Qionghai Dai, Diego Gutierrez

Optics Letters, Vol. 43(6), 2018

Abstract: Introducing polarization into transient imaging improves depth estimation in participating media, by discriminating reflective from scattered light transport, and calculating depth from the former component only. Previous works have leveraged this approach, under the assumption of uniform polarization properties. However, the orientation and intensity of polarization inside scattering media is non-uniform, both in the spatial and temporal domains. As a result of this simplifying assumption, the accuracy of the estimated depth worsens significantly as the optical thickness of the medium increases. In this letter, we introduce a novel adaptive polarization-difference method for transient imaging, taking into account the non-uniform nature of polarization in scattering media. Our results demonstrate a superior performance for impulse-based transient imaging over previous unpolarized or uniform approaches.

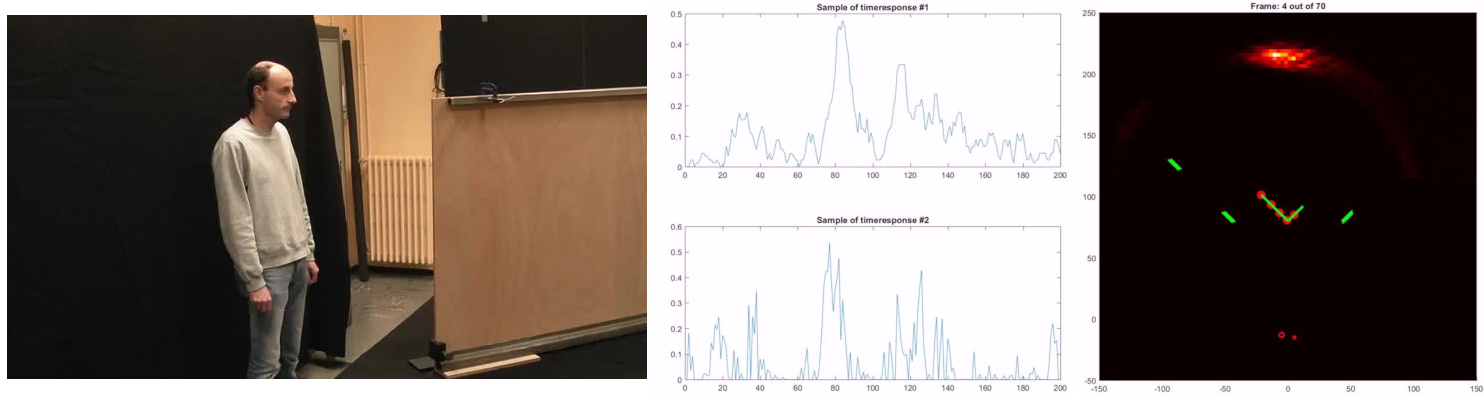

Sensing around the next corner

Martin Laurenzis, Andreas Velten, Ji Hyun Nam, Marco La Manna, Mohit Gupta, Diego Gutierrez, Adrian Jarabo, Mauro Buttafava, Alberto Tosi

SPIE DCS, Computational Imaging III (Oral), 2018

Abstract: In recent times, non-line-of-sight sensing has been demonstrated to reconstruct the shape or track the position of objects around a corner, by analyzing the photon flux coming from a remote surface in both the spatial and temporal domains. A common scenario, where a light pulse is reflected off a relay surface, the occluded target, and again off a relay surface back to the sensor unit, can localize the hidden source of the reflection around a single corner. However, higher-order reflections are neglected, limiting the reconstruction to three-bounce information.

DeepToF: Off-the-Shelf Real-time Correction of Multipath Interference in Time-of-Flight Imaging

Julio Marco, Quercus Hernandez, Adolfo Muñoz, Yue Dong, Adrian Jarabo, Min Kim, Xin Tong, Diego Gutierrez

ACM Transactions on Graphics, Vol. 36(6) (SIGGRAPH Asia 2017)

Abstract: Time-of-flight (ToF) imaging has become a widespread technique for depth estimation, allowing affordable off-the-shelf cameras to provide depth maps in real time. However, multipath interference (MPI) resulting from indirect illumination significantly degrades the captured depth. Most previous works have tried to solve this problem by means of complex hardware modifications or costly computations. In this work we avoid these approaches, and propose a new technique that corrects errors in depth caused by MPI that requires no camera modifications, and corrects depth in just 10 milliseconds per frame. By observing that most MPI information can be expressed as a function of the captured depth, we pose MPI removal as a convolutional approach, and model it using a convolutional neural network. In particular, given that the input and output data present similar structure, we base our network on an autoencoder, which we train in two stages: first, we use the encoder (convolution filters) to learn a suitable basis to represent corrupted range images; then, we train the decoder (deconvolution filters) to correct depth from the learned basis from synthetically generated scenes. This approach allows us to tackle the lack of reference data, by using a large-scale captured training set with corrupted depth to train the encoder, and a smaller synthetic training set with ground truth depth to train the corrector stage of the network, which we generate by using a physically-based, time-resolved rendering. We demonstrate and validate our method on both synthetic and real complex scenarios, using an off-the-shelf ToF camera, and with only the captured incorrect depth as input.

Recent Advances in Transient Imaging: A Computer Graphics and Vision Perspective

Adrian Jarabo, Belen Masia, Julio Marco, Diego Gutierrez

Visual Informatics, Vol. 1(1), 2017

Abstract: Transient imaging has recently made a huge impact in the computer graphics and computer vision fields. By capturing, reconstructing, or simulating light transport at extreme temporal resolutions, researchers have proposed novel techniques to show movies of light in motion, see around corners, detect objects in highly-scattering media, or infer material properties from a distance, to name a few. The key idea is to leverage the wealth of information in the temporal domain at the pico or nanosecond resolution, information usually lost during the capture-time temporal integration. This paper presents recent advances in this field of transient imaging from a graphics and vision perspective, including capture techniques, analysis, applications and simulation.

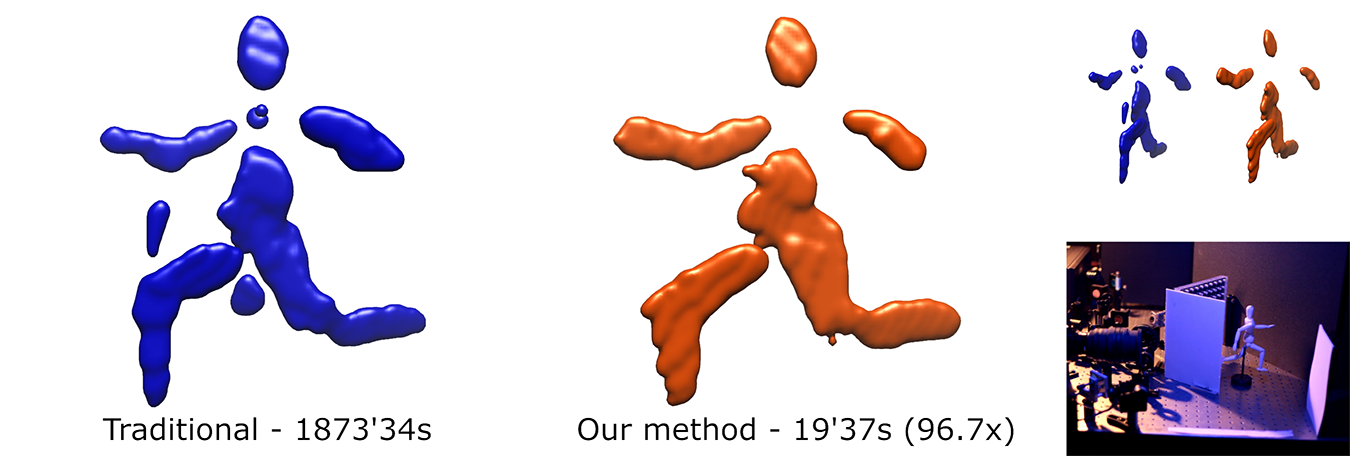

Fast Back-Projection for Non-Line of Sight Reconstruction

Victor Arellano, Diego Gutierrez, Adrian Jarabo

SIGGRAPH 2017 Poster

Abstract: Recent works have demonstrated non-line of sight (NLOS) reconstruction by using the time-resolved signal from multiply scattered light. These works combine ultrafast imaging systems with computation, which back-projects the recorded space-time signal to build a probabilistic map of the hidden geometry. Unfortunately, this computation is slow, becoming a bottleneck as the imaging technology improves. In this work, we propose a new back-projection technique for NLOS reconstruction, which is up to a thousand times faster than previous work, with almost no quality loss. We build on the observation that the hidden geometry probability map can be built as the intersection of the three-bounce space-time manifolds defined by the light illuminating the hidden geometry and the visible point receiving the scattered light from such hidden geometry. This allows us to pose the reconstruction of the hidden geometry as the voxelization of these space-time manifolds, which has lower theoretical complexity and is easily implementable on the GPU. We demonstrate the efficiency and quality of our technique compared against previous methods in both captured and synthetic data.
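
To make the back-projection idea concrete, here is a minimal Python sketch of the conventional per-voxel back-projection that the abstract describes as the slow baseline. It is not the voxelization method proposed in the paper. The function names (`backproject`, `simulate`), the toy geometry, and the measurement layout are all assumptions made for illustration. We also assume that the laser and sensor points lie on the relay wall, that the time axis already excludes the legs between the device and the wall, and that intensity falloff can be ignored.

```python
import math

def backproject(voxels, measurements, bin_length):
    """Score each candidate voxel by summing the histogram bins it could explain.

    measurements: list of (laser_point, sensor_point, histogram) tuples.
    bin_length: path length covered by one time bin (speed of light times bin duration).
    """
    scores = []
    for voxel in voxels:
        score = 0.0
        for laser, sensor, histogram in measurements:
            # All voxels with the same laser-voxel-sensor path length lie on one ellipsoid.
            path_length = math.dist(laser, voxel) + math.dist(voxel, sensor)
            time_bin = int(path_length / bin_length)
            if time_bin < len(histogram):
                score += histogram[time_bin]
        scores.append(score)
    return scores

def simulate(hidden_point, laser, sensors, bin_length, num_bins):
    """Hypothetical forward model: one ideal spike per sensor point for a single hidden point."""
    measurements = []
    for sensor in sensors:
        histogram = [0.0] * num_bins
        path_length = math.dist(laser, hidden_point) + math.dist(hidden_point, sensor)
        histogram[int(path_length / bin_length)] = 1.0
        measurements.append((laser, sensor, histogram))
    return measurements

# Toy setup (assumed): relay wall in the plane z = 0, hidden point one metre behind it.
laser = (0.0, 0.0, 0.0)
sensors = [(-0.5, 0.0, 0.0), (0.5, 0.0, 0.0), (0.0, -0.5, 0.0), (0.0, 0.5, 0.0)]
hidden_point = (0.0, 0.0, 1.0)
candidates = [hidden_point, (0.5, 0.0, 1.0), (0.0, 0.0, 0.5), (-0.5, 0.0, 1.5)]

measurements = simulate(hidden_point, laser, sensors, bin_length=0.01, num_bins=400)
scores = backproject(candidates, measurements, bin_length=0.01)
best = candidates[scores.index(max(scores))]
print(best, scores)
```

The cost of this baseline grows with the number of voxels times the number of measurements, which is the bottleneck the abstract refers to. Only the true hidden point is consistent with every sensor point, so it collects the highest score.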

Transient Photon Beams

Julio Marco, Wojciech Jarosz, Diego Gutierrez, Adrian Jarabo

Spanish Computer Graphics Conference (CEIG), 2017

Abstract: Recent advances in transient imaging and their applications have created the need for forward models that allow precise generation and analysis of time-resolved light transport data. However, traditional steady-state rendering techniques are not suitable for computing transient light transport due to the aggravation of the inherent Monte Carlo variance over time. These issues are especially problematic in participating media, which demand a high number of samples to achieve noise-free solutions. We address this problem by presenting the first photon-based method for transient rendering of participating media that performs density estimations on time-resolved precomputed photon maps. We first introduce the transient integral form of the radiative transfer equation into the computer graphics community, including transient delays on the scattering events. Based on this formulation we leverage the high density and parameterized continuity provided by photon beams algorithms to present a new transient method that significantly mitigates variance and efficiently renders participating media effects in transient state.

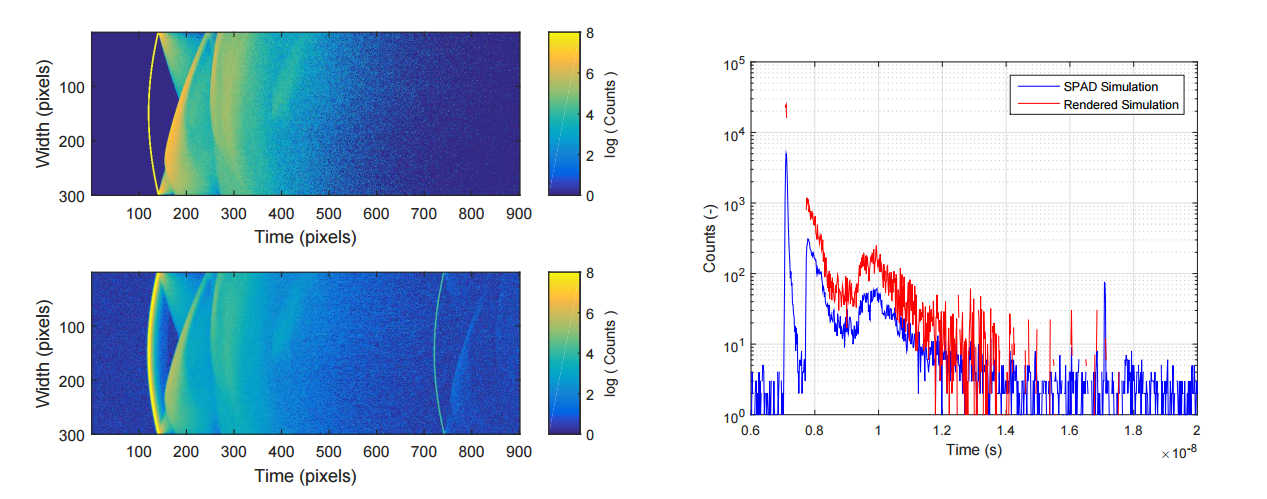

A Computational Model of a Single-Photon Avalanche Diode Sensor for Transient Imaging

Quercus Hernandez, Diego Gutierrez, Adrian Jarabo

Technical report (arXiv:1703.02635), 2017

Abstract: Single-Photon Avalanche Diodes (SPAD) are affordable photodetectors, capable of collecting extremely fast low-energy events, due to their single-photon sensitivity. This makes them very suitable for time-of-flight-based range imaging systems, reducing costs and power requirements without sacrificing much temporal resolution. In this work we describe a computational model to simulate the behaviour of SPAD sensors, aiming to provide a realistic camera model for time-resolved light transport simulation, with applications in prototyping new reconstruction techniques based on SPAD time-of-flight data. Our model accounts for the major effects of the sensor on the incoming signal. We compare our model against real-world measurements, and apply it to a variety of scenarios, including complex multiply-scattered light transport.

A Framework for Transient Rendering

Adrian Jarabo, Julio Marco, Adolfo Muñoz, Raul Buisan, Wojciech Jarosz and Diego Gutierrez

ACM Transactions on Graphics, Vol. 33(6) (SIGGRAPH Asia 2014)

Abstract: Recent advances in ultra-fast imaging have triggered many promising applications in graphics and vision, such as capturing transparent objects, estimating hidden geometry and materials, or visualizing light in motion. There is, however, very little work regarding the effective simulation and analysis of transient light transport, where the speed of light can no longer be considered infinite. We first introduce the transient path integral framework, formally describing light transport in transient state. We then analyze the difficulties arising when considering the light's time-of-flight in the simulation (rendering) of images and videos. We propose a novel density estimation technique that allows reusing sampled paths to reconstruct time-resolved radiance, and devise new sampling strategies that take into account the distribution of radiance along time in participating media. We then efficiently simulate time-resolved phenomena (such as caustic propagation, fluorescence or temporal chromatic dispersion), which can help design future ultra-fast imaging devices using an analysis-by-synthesis approach, as well as to achieve a better understanding of the nature of light transport.
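
As a rough illustration of why density estimation helps in the temporal domain, the Python sketch below reconstructs time-resolved radiance from a set of path samples with a generic kernel estimator. This is a textbook kernel density estimate with an Epanechnikov kernel, not the specific technique of the paper. The function name `temporal_kde`, the sample layout, and the bandwidth value are assumptions for illustration.

```python
def temporal_kde(samples, t, bandwidth):
    """Estimate radiance at time t from (arrival_time, contribution) path samples.

    Each sample spreads its contribution over a window of width 2 * bandwidth,
    so one path can contribute to several neighbouring time values instead of
    falling into a single narrow time bin.
    """
    total = 0.0
    for arrival_time, contribution in samples:
        u = (t - arrival_time) / bandwidth
        if abs(u) < 1.0:
            # Epanechnikov kernel, normalized so that it integrates to one over time.
            total += contribution * 0.75 * (1.0 - u * u) / bandwidth
    return total / len(samples)

# Hypothetical samples: arrival times in nanoseconds, arbitrary radiance units.
samples = [(1.0, 2.0), (1.2, 1.0), (3.0, 0.5)]
profile = [temporal_kde(samples, 0.1 * i, bandwidth=0.5) for i in range(40)]
print(max(profile))
```

A wider bandwidth lowers variance at the cost of temporal blur, which is the usual bias and variance trade-off of kernel estimators.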

Decomposing Global Light Transport using Time of Flight Imaging

D. Wu, A. Velten, M. O'Toole, B. Masia, A. Agrawal, Q. Dai, R. Raskar

International Journal of Computer Vision, Vol. 107(2), 2014

Abstract: Global light transport is composed of direct and indirect components. In this paper, we take the first steps toward analyzing light transport using the high temporal resolution information of time of flight (ToF) images. With pulsed scene illumination, the time profile at each pixel of these images separates different illumination components by their finite travel time and encodes complex interactions between the incident light and the scene geometry with spatially-varying material properties. We exploit the time profile to decompose light transport into its constituent direct, subsurface scattering, and interreflection components. We show that the time profile is well modelled using a Gaussian function for the direct and interreflection components, and a decaying exponential function for the subsurface scattering component. We use our direct, subsurface scattering, and interreflection separation algorithm for five computer vision applications: recovering projective depth maps, identifying subsurface scattering objects, measuring parameters of analytical subsurface scattering models, performing edge detection using ToF images and rendering novel images of the captured scene with adjusted amounts of subsurface scattering.
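
The per-pixel time profile model described in the abstract can be sketched in Python as the sum of two Gaussians (direct and interreflection components) and a decaying exponential (subsurface scattering). This is only a forward model under assumed parameterizations. The function names, parameter tuples, and numeric values below are hypothetical, and the separation algorithm itself is not reproduced here.

```python
import math

def gaussian(t, amplitude, center, sigma):
    """Gaussian pulse, used here for the direct and interreflection components."""
    return amplitude * math.exp(-0.5 * ((t - center) / sigma) ** 2)

def decaying_exponential(t, amplitude, onset, decay_rate):
    """One-sided exponential decay, used here for the subsurface scattering component."""
    if t < onset:
        return 0.0
    return amplitude * math.exp(-decay_rate * (t - onset))

def time_profile(t, direct, interreflection, subsurface):
    """Total profile at time t as the sum of the three components."""
    return (gaussian(t, *direct)
            + gaussian(t, *interreflection)
            + decaying_exponential(t, *subsurface))

# Hypothetical parameters (amplitude, center or onset, width or decay rate).
direct = (1.0, 2.0, 0.1)
interreflection = (0.3, 4.0, 0.4)
subsurface = (0.5, 2.0, 1.5)

profile = [time_profile(0.05 * i, direct, interreflection, subsurface) for i in range(200)]
print(max(profile))
```

Fitting these three terms to a measured profile, for example with a nonlinear least-squares solver, would be one way to separate the components, although the exact fitting procedure is not specified in the abstract.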

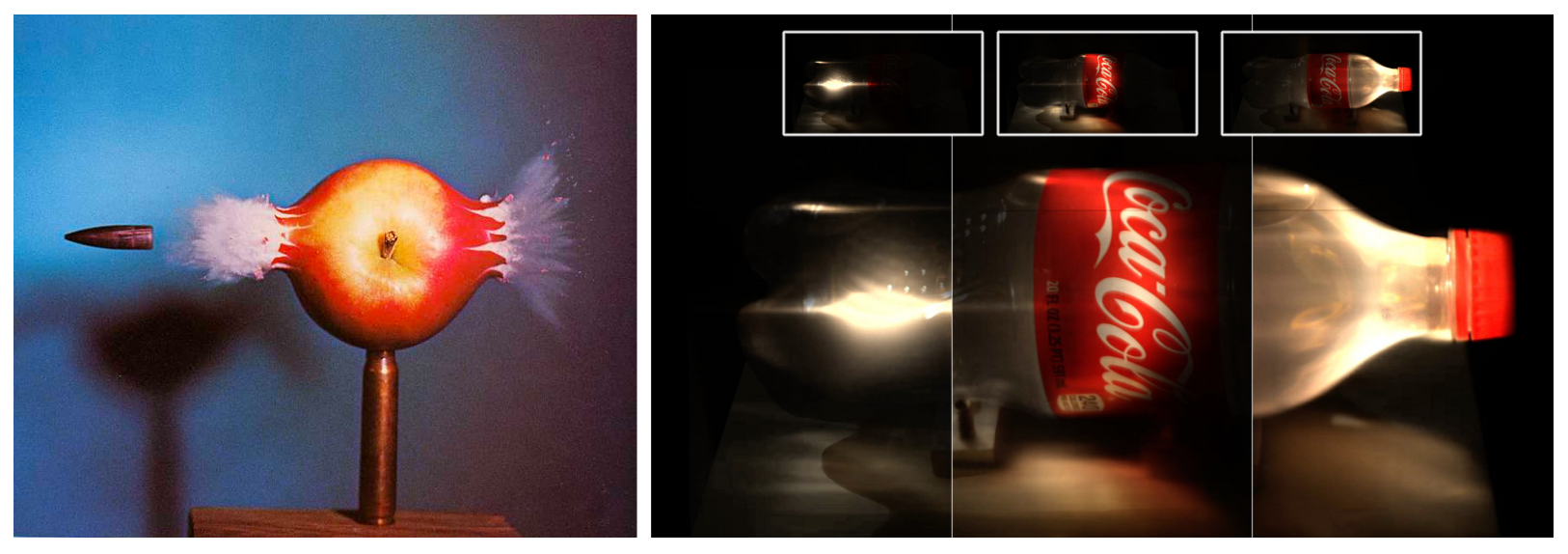

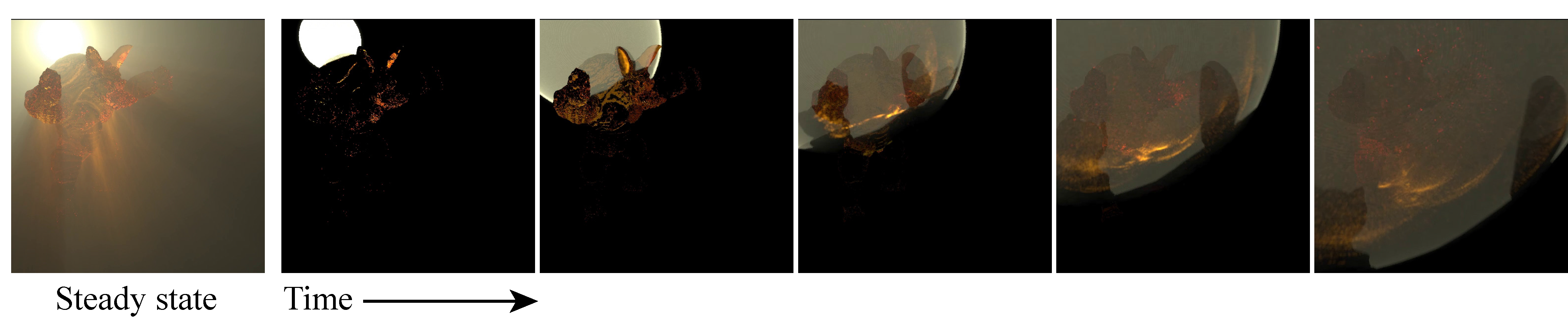

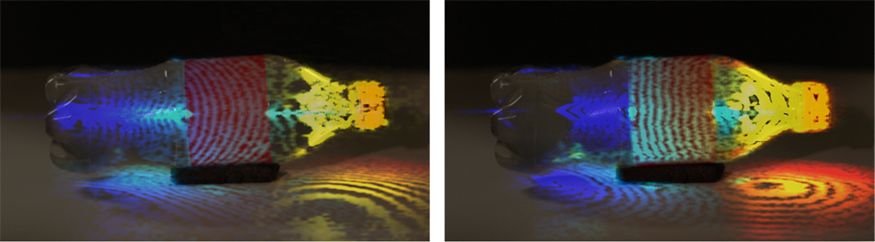

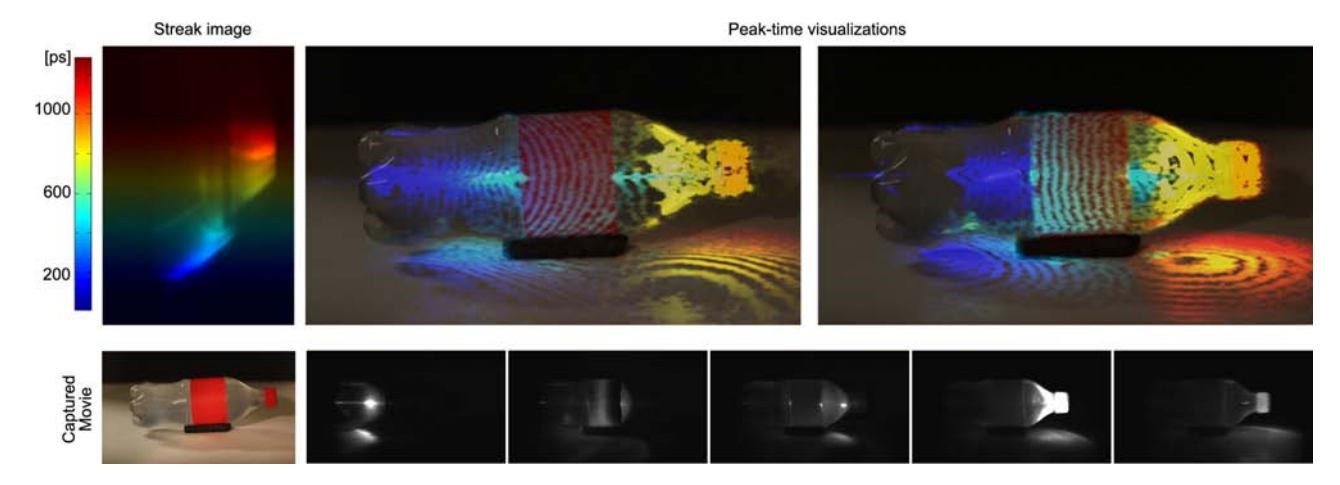

Femto-Photography: Capturing and Visualizing the Propagation of Light

A. Velten, D. Wu, A. Jarabo, B. Masia, C. Barsi, C. Joshi, E. Lawson, M. Bawendi, D. Gutierrez and R. Raskar

ACM Transactions on Graphics, Vol. 32(4) (SIGGRAPH 2013)

Abstract: We present femto-photography, a novel imaging technique to capture and visualize the propagation of light. With an effective exposure time of 1.85 picoseconds (ps) per frame, we reconstruct movies of ultrafast events at an equivalent resolution of about one half trillion frames per second. Because cameras with this shutter speed do not exist, we re-purpose modern imaging hardware to record an ensemble average of repeatable events that are synchronized to a streak sensor, in which the time of arrival of light from the scene is coded in one of the sensor's spatial dimensions. We introduce reconstruction methods that allow us to visualize the propagation of femtosecond light pulses through macroscopic scenes; at such fast resolution, we must consider the notion of time-unwarping between the camera's and the world's space-time coordinate systems to take into account effects associated with the finite speed of light. We apply our femto-photography technique to visualizations of very different scenes, which allow us to observe the rich dynamics of time-resolved light transport effects, including scattering, specular reflections, diffuse interreflections, diffraction, caustics, and subsurface scattering. Our work has potential applications in artistic, educational, and scientific visualizations; industrial imaging to analyze material properties; and medical imaging to reconstruct subsurface elements. In addition, our time-resolved technique may motivate new forms of computational photography.
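
The frame rate quoted in the abstract follows directly from the exposure time, and the time-unwarping step can be illustrated with a simple correction. The Python sketch below is a simplified reading under stated assumptions: light travels at its vacuum speed, the depth of each scene point from the camera is known, and camera time means the arrival time at the sensor. The function names are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second, vacuum value

def equivalent_frame_rate(exposure_seconds):
    """Frames per second implied by a given exposure time per frame."""
    return 1.0 / exposure_seconds

def unwarp_time(camera_time, depth):
    """Convert a camera-time stamp into world time for a point at the given depth.

    Light from a point at distance `depth` needs depth / c to reach the camera,
    so the event happened that much earlier than it was recorded.
    """
    return camera_time - depth / SPEED_OF_LIGHT

frame_rate = equivalent_frame_rate(1.85e-12)
print(f"{frame_rate:.3e} frames per second")

# A point 30 cm away is recorded about one nanosecond after the event occurs.
delay = 0.3 / SPEED_OF_LIGHT
print(f"{delay * 1e9:.3f} ns")
```

An exposure of 1.85 ps gives roughly 5.4e11 frames per second, which matches the "about one half trillion" figure in the abstract.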

Relativistic Ultrafast Rendering Using Time-of-Flight Imaging

Andreas Velten, Di Wu, Adrian Jarabo, Belen Masia, Christopher Barsi, Everett Lawson, Chinmaya Joshi, Diego Gutierrez, Ramesh Raskar

SIGGRAPH 2012 (talk)
