News

- December, 2022: We will be presenting this work at SIGGRAPH Asia 2022.

- March, 2022: This work has received the IEEE VR 2022 Best Journal Track Paper Award!

- February, 2022: Presentation video available (see Presentation Video).

- January, 2022: Paper accepted to TVCG (Proc. IEEE VR 2022) (see Downloads).

- April, 2021: Demo code available (see Code).

- March, 2021: Supplementary video available (see Supplementary Video).

- March, 2021: Paper and supplementary materials available on arXiv (see Downloads).

- March, 2021: Website launched.

Supplementary Video

Presentation Video (IEEE VR 2022)

Abstract

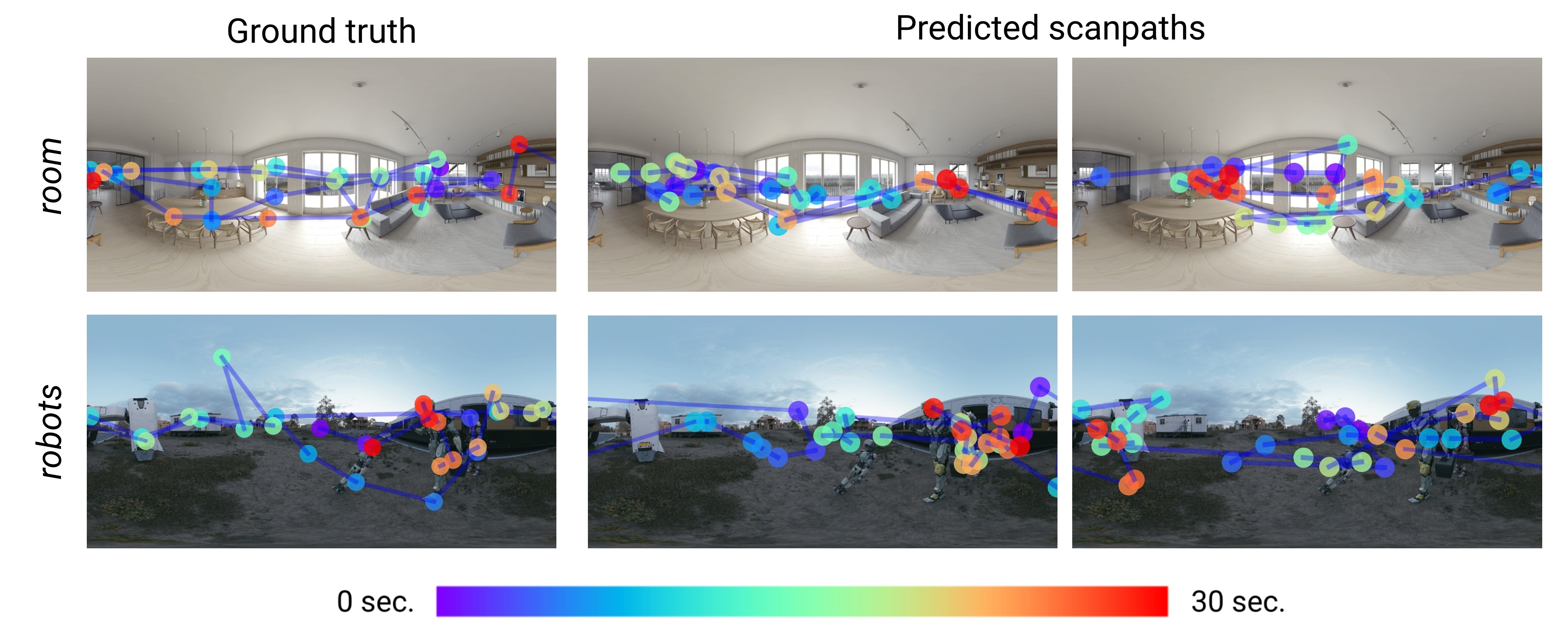

Understanding and modeling the dynamics of human gaze behavior in 360º environments is a key challenge in computer vision and virtual reality. Generative adversarial approaches could alleviate this challenge by generating a large number of possible scanpaths for unseen images. Existing methods for scanpath generation, however, do not adequately predict realistic scanpaths for 360º images. We present ScanGAN360, a new generative adversarial approach to address this challenging problem. Our network generator is tailored to the specifics of 360º images representing immersive environments. Specifically, we accomplish this by leveraging a spherical adaptation of dynamic time warping as a loss function and by proposing a novel parameterization of 360º scanpaths. The quality of our scanpaths surpasses that of competing approaches by a large margin and is almost on par with the human baseline. ScanGAN360 thus allows fast simulation of large numbers of virtual observers whose behavior mimics real users, enabling a better understanding of gaze behavior and novel applications in virtual scene design.
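To illustrate what a spherical adaptation of dynamic time warping (DTW) can look like, here is a minimal Python sketch. It assumes that scanpaths are sequences of (latitude, longitude) points in radians, and it uses the great-circle distance as the local cost between gaze points. It uses classic, non-differentiable DTW for illustration only. It is not the ScanGAN360 loss, since a training loss would require a differentiable variant. All function names are hypothetical.

```python
import math
from typing import List, Tuple

# Hypothetical representation: a gaze point is (latitude, longitude) in radians.
Point = Tuple[float, float]


def to_unit_vector(p: Point) -> Tuple[float, float, float]:
    """Map a (lat, lon) point to a 3D unit vector on the sphere."""
    lat, lon = p
    return (math.cos(lat) * math.cos(lon),
            math.cos(lat) * math.sin(lon),
            math.sin(lat))


def great_circle(a: Point, b: Point) -> float:
    """Angular distance (radians) between two points on the unit sphere."""
    va, vb = to_unit_vector(a), to_unit_vector(b)
    dot = sum(x * y for x, y in zip(va, vb))
    # Clamp to [-1, 1] to guard acos against floating-point overshoot.
    return math.acos(max(-1.0, min(1.0, dot)))


def spherical_dtw(s: List[Point], t: List[Point]) -> float:
    """Classic DTW alignment cost using great-circle distance as local cost."""
    n, m = len(s), len(t)
    inf = float("inf")
    # D[i][j] = minimal cost of aligning s[:i] with t[:j].
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = great_circle(s[i - 1], t[j - 1])
            # Extend the cheapest of: insertion, deletion, or match.
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]
```

Measuring distances on the sphere avoids the artifact that a flat equirectangular distance would introduce. For example, two fixations on the equator at longitudes just inside +π and −π are only about 0.02 rad apart on the sphere, but they lie at opposite edges of the panorama. `spherical_dtw([(0.0, math.pi - 0.01)], [(0.0, -math.pi + 0.01)])` therefore returns roughly 0.02.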

Downloads

Code

You can find the code and model for ScanGAN360 in our GitHub repository.

BibTeX

Related Work

- 2020: Panoramic convolutions for 360º single-image saliency prediction